At WWDC 2019, Apple gave the iOS Vision framework a big upgrade: it now supports optical character recognition (OCR) that can extract raw text from an image! An app can take advantage of this capability with very little code. All the processing happens right on the device, so there is no network round trip and results come back quickly. Let's look at how easy it is to do this in Xcode 11 and iOS 13.

Let's assume I have a recipes app and I want to share one of my favorite recipes with other users. The recipe is in a book that I have at home. How cool would it be if, instead of just posting an image of the recipe, I could extract the text? That would make my recipe searchable by ingredient and would make the text of the recipe much easier to convert into a readable format.

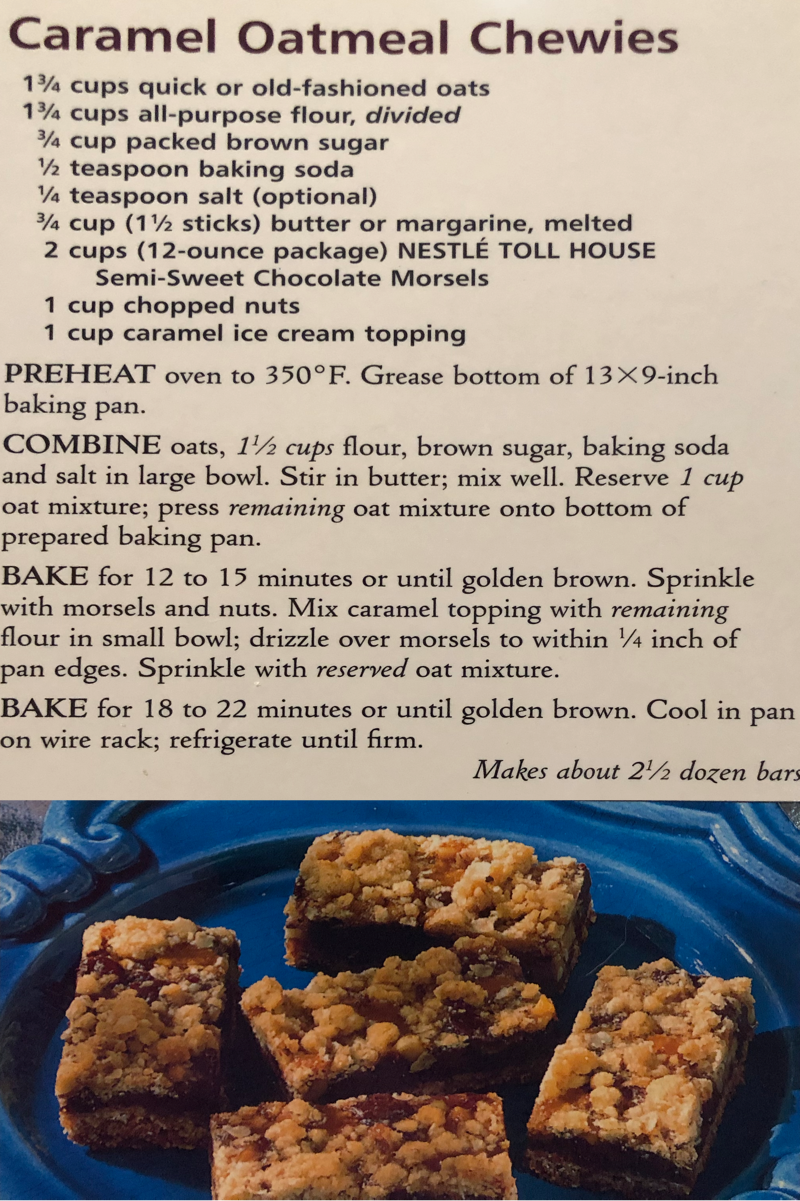

Take a look at the picture of my recipe. The quality isn't terrible, but it isn't perfect either. Let's see how we can use the Vision framework to extract the text, and whether the output is accurate.

Let's check out an example using Xcode 11 with a minimum deployment target of iOS 13. I use Apple's new Vision framework to turn my image into text, and a text view in a view controller displays the text that Vision finds in the recipe.
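A minimal sketch of the recognition step follows. It is not the original project's code; it assumes the image is already available as a `CGImage` (for a `UIImage`, that is its `cgImage` property), and `makeTextRequest(level:)` and `recognizeLines(in:level:)` are hypothetical helper names.

```swift
import CoreGraphics
import Vision

/// Hypothetical helper: creates a text-recognition request at the given level.
/// `.accurate` is also Vision's own default.
func makeTextRequest(level: VNRequestTextRecognitionLevel = .accurate) -> VNRecognizeTextRequest {
    let request = VNRecognizeTextRequest()
    request.recognitionLevel = level
    return request
}

/// Hypothetical helper: runs text recognition on `cgImage` and returns one string
/// per recognized line. perform(_:) is synchronous, so call this off the main thread.
func recognizeLines(in cgImage: CGImage,
                    level: VNRequestTextRecognitionLevel = .accurate) throws -> [String] {
    let request = makeTextRequest(level: level)
    let handler = VNImageRequestHandler(cgImage: cgImage, options: [:])
    // Blocks until every request in the array has finished.
    try handler.perform([request])
    // Each observation is one line of text; topCandidates(1) returns the best guess.
    let observations = request.results as? [VNRecognizedTextObservation] ?? []
    return observations.compactMap { $0.topCandidates(1).first?.string }
}
```

In a view controller, you might call `recognizeLines(in:)` from a background queue, then set `textView.text = lines.joined(separator: "\n")` back on the main queue, where `textView` stands in for whatever text view your layout uses.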

The recognition step uses a VNRecognizeTextRequest, which is part of the Vision framework. The request's results are recognized text observations (VNRecognizedTextObservation), each representing a line of text extracted from the image. For display purposes, I append each line to the text view, which then shows up in the simulator. Here is the resulting output from the recipe.


Pretty accurate! The recognizer did fail on a few things, such as reading 1 3/4 as 13/4, but for the most part everything else was recognized correctly. Notice also that the text comes back line by line, so no formatting is preserved. This means the text won't wrap properly without some additional logic to restore the formatting. As far as I can tell, nothing in the Vision framework helps with formatting, so another library or my own logic would be needed to add it back.
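One way to put some of that formatting back is a small heuristic that glues a wrapped line onto the line before it. The hypothetical helper `joinWrappedLines(_:)` below is not part of Vision; it assumes that a line starting with a lowercase letter, following a line that doesn't end in `.`, `!`, `?`, or `:`, is the continuation of a wrapped sentence. Ingredient lines that start with a quantity stay on their own lines.

```swift
import Foundation

/// Hypothetical helper: rejoins sentences that Vision split across lines.
/// Heuristic (an assumption, not a Vision feature): a line is a continuation when it
/// starts lowercase and the previous line lacks sentence-ending punctuation.
func joinWrappedLines(_ lines: [String]) -> [String] {
    var paragraphs: [String] = []
    for rawLine in lines {
        let line = rawLine.trimmingCharacters(in: .whitespaces)
        guard !line.isEmpty else { continue }  // skip blank lines
        let startsLowercase = line.first?.isLowercase ?? false
        if startsLowercase,
           let previous = paragraphs.last,
           let lastChar = previous.last,
           !".!?:".contains(lastChar) {
            // Looks like a wrapped sentence: append it to the previous line.
            paragraphs[paragraphs.count - 1] = previous + " " + line
        } else {
            paragraphs.append(line)
        }
    }
    return paragraphs
}
```

For example, `["Whisk the eggs and", "sugar until pale."]` becomes the single line `"Whisk the eggs and sugar until pale."`. A real recipe layout would probably need more rules, such as using each observation's `boundingBox` to detect columns.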

There are a number of options that can be set on a VNRecognizeTextRequest.
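The options most relevant here are sketched below. `makeConfiguredRequest()` is a hypothetical helper, and the specific values (the `en-US` language, the `tsp`/`tbsp` custom words, the minimum text height) are illustrative assumptions for a recipe card rather than recommended settings.

```swift
import Vision

/// Hypothetical helper: builds a text request with each common option set explicitly.
func makeConfiguredRequest() -> VNRecognizeTextRequest {
    let request = VNRecognizeTextRequest()
    // .accurate is slower but more thorough; .fast trades accuracy for speed.
    request.recognitionLevel = .accurate
    // Apply language correction to the raw character guesses; turn it off
    // when reading things that aren't words, such as codes or serial numbers.
    request.usesLanguageCorrection = true
    // Languages to recognize, in priority order (illustrative value).
    request.recognitionLanguages = ["en-US"]
    // Extra vocabulary for language correction (illustrative recipe abbreviations).
    request.customWords = ["tsp", "tbsp"]
    // Minimum text height as a fraction of the image height (illustrative value);
    // smaller values pick up finer print at extra processing cost.
    request.minimumTextHeight = 0.02
    return request
}
```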


In my testing, a recognition level of .fast returned almost instantly, while .accurate took about 3 seconds. On this image, though, .accurate actually got some things wrong that .fast got right. Because .fast returns so quickly, it is a good fit for use cases where the camera is running and you want real-time text recognition.
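To try the same comparison on your own images, a rough timing harness might look like the sketch below. `compareRecognitionLevels(on:)` and `LevelTiming` are hypothetical names, and `Date`-based wall-clock timing is an assumption that is fine for a coarse fast-versus-accurate comparison but is not a precise benchmark.

```swift
import CoreGraphics
import Foundation
import Vision

/// Hypothetical result type: one timing and its recognized lines per level.
struct LevelTiming {
    let level: VNRequestTextRecognitionLevel
    let seconds: TimeInterval
    let lines: [String]
}

/// Runs one recognition pass per level on the same image and records wall-clock time.
func compareRecognitionLevels(on cgImage: CGImage) throws -> [LevelTiming] {
    var timings: [LevelTiming] = []
    for level in [VNRequestTextRecognitionLevel.fast, .accurate] {
        let request = VNRecognizeTextRequest()
        request.recognitionLevel = level
        let handler = VNImageRequestHandler(cgImage: cgImage, options: [:])
        let start = Date()
        try handler.perform([request])  // synchronous: returns when recognition is done
        let elapsed = Date().timeIntervalSince(start)
        let observations = request.results as? [VNRecognizedTextObservation] ?? []
        let lines = observations.compactMap { $0.topCandidates(1).first?.string }
        timings.append(LevelTiming(level: level, seconds: elapsed, lines: lines))
    }
    return timings
}
```

Keeping the lines alongside the timings makes it easy to diff what each level got right. The first request in a process may include one-time setup, so it is worth running the comparison more than once before trusting the numbers.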

For cases where a large amount of text might take a while to process, I can show a progress bar while recognition runs on a background thread. VNRecognizeTextRequest provides a progress handler for this purpose.
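Here is a minimal sketch of wiring up that handler, assuming the caller passes a `CGImage` and two callbacks; `recognizeText(in:onProgress:onComplete:)` is a hypothetical helper. The sketch assumes the handler may be called off the main thread, so it hops to the main queue before reporting progress, which is where a progress bar would be updated.

```swift
import CoreGraphics
import Foundation
import Vision

/// Hypothetical helper: recognizes text on a background queue, reporting progress
/// (0.0 to 1.0) and the final lines on the main queue.
func recognizeText(in cgImage: CGImage,
                   onProgress: @escaping (Double) -> Void,
                   onComplete: @escaping ([String]) -> Void) {
    let request = VNRecognizeTextRequest()
    request.recognitionLevel = .accurate
    // Assumption: this may fire off the main thread, so hop to main before touching UI.
    request.progressHandler = { _, fractionCompleted, _ in
        DispatchQueue.main.async { onProgress(fractionCompleted) }
    }
    // perform(_:) blocks, so run it on a background queue to keep the UI responsive.
    DispatchQueue.global(qos: .userInitiated).async {
        let handler = VNImageRequestHandler(cgImage: cgImage, options: [:])
        // Errors are swallowed in this sketch and reported as "no lines".
        try? handler.perform([request])
        let observations = request.results as? [VNRecognizedTextObservation] ?? []
        let lines = observations.compactMap { $0.topCandidates(1).first?.string }
        DispatchQueue.main.async { onComplete(lines) }
    }
}
```

For example, `onProgress: { progressView.progress = Float($0) }`, where `progressView` is a hypothetical `UIProgressView`.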


Overall, the Vision framework is really cool, and it's exciting how easy it is to add to an app. I'm curious to see what developers will do with it in the future!

If you found this helpful and would like to work with us on your next project, get in touch at https://atomicrobot.com/contact/.