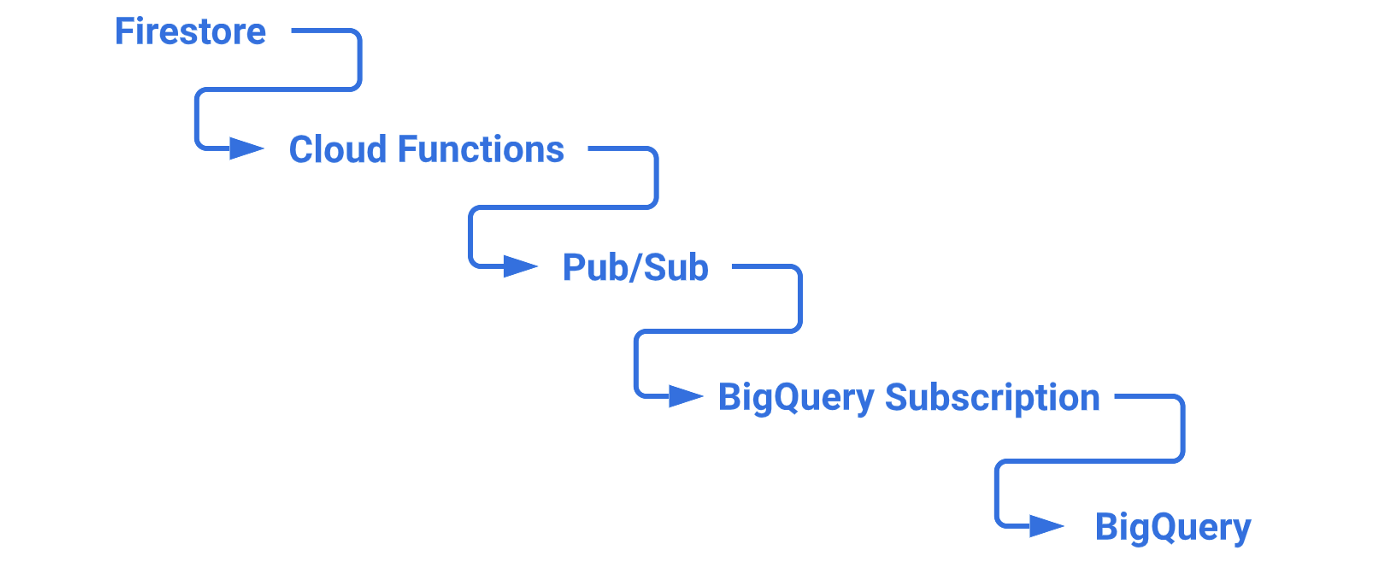

In our last blog post, we documented how to synchronize mobile app data from Firestore to BigQuery, with the raw JSON placed in a data column of a BigQuery table. That approach is perfectly acceptable, but the data you want to synchronize may have a defined schema that will never, or at least rarely, change. In that case, it is better to break the JSON data out into individual BigQuery table columns. So, let’s cover that now.

We will build off the following services enabled during the last blog:

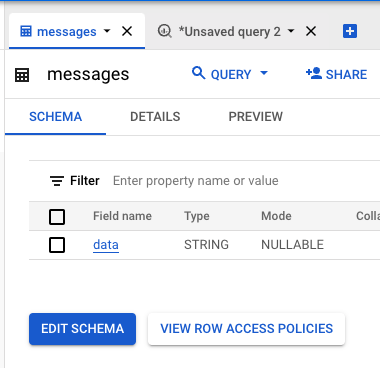

In GCP, go to BigQuery.

Select the messages table from the left navigation tree.

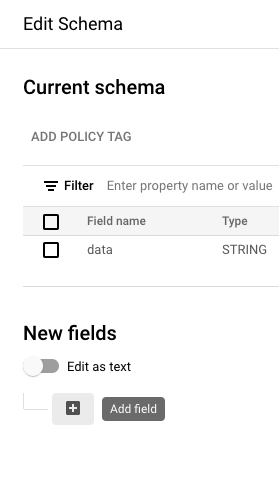

Click the EDIT SCHEMA button.

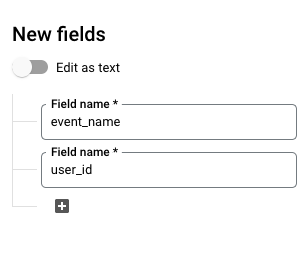

Click the Add Field button in the New Fields section.

Type event_name.

Click the Add field button again.

Type user_id.

Click the SAVE button.

In GCP, go to Pub/Sub.

Click on Schemas from the left navigation bar.

Click the CREATE SCHEMA button.

Use the following settings for the Schema:

message

Paste the following as the Schema definition:
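A schema definition covering the two columns added above might look like the following sketch. It assumes Avro as the schema type and string-typed fields; the record name `Message` is a placeholder, and the field types should be adjusted to match your table. The snippet builds the definition as an object and prints the JSON text you would paste.

```javascript
// Hypothetical Avro record for the event_name and user_id columns.
// Field types are assumed to be strings; the record name is a placeholder.
const messageSchema = {
  type: "record",
  name: "Message",
  fields: [
    { name: "event_name", type: "string" },
    { name: "user_id", type: "string" },
  ],
};

// Print the JSON text that could be pasted into the Schema definition box.
const schemaDefinition = JSON.stringify(messageSchema, null, 2);
console.log(schemaDefinition);
```

The field names need to match the BigQuery column names exactly, since the subscription maps schema fields to columns by name.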


Click the CREATE button.

Click on Topics from the left navigation bar.

Click the CREATE TOPIC button.

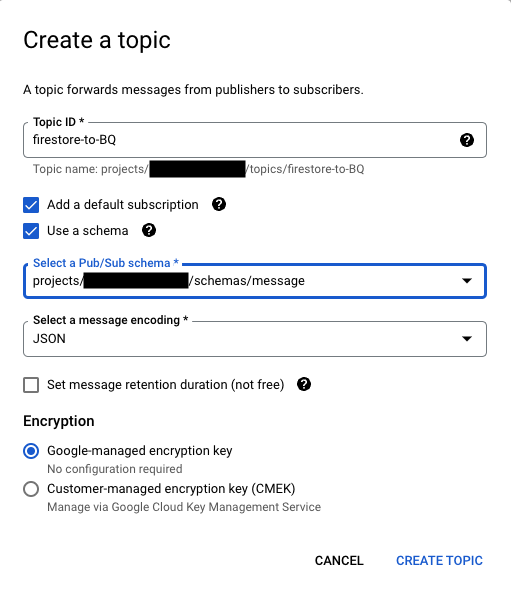

Use the following settings for the Topic:

firestore-to-BQ

Click the CREATE TOPIC button.

Click the EXPORT TO BIGQUERY button.

Click the CONTINUE button.

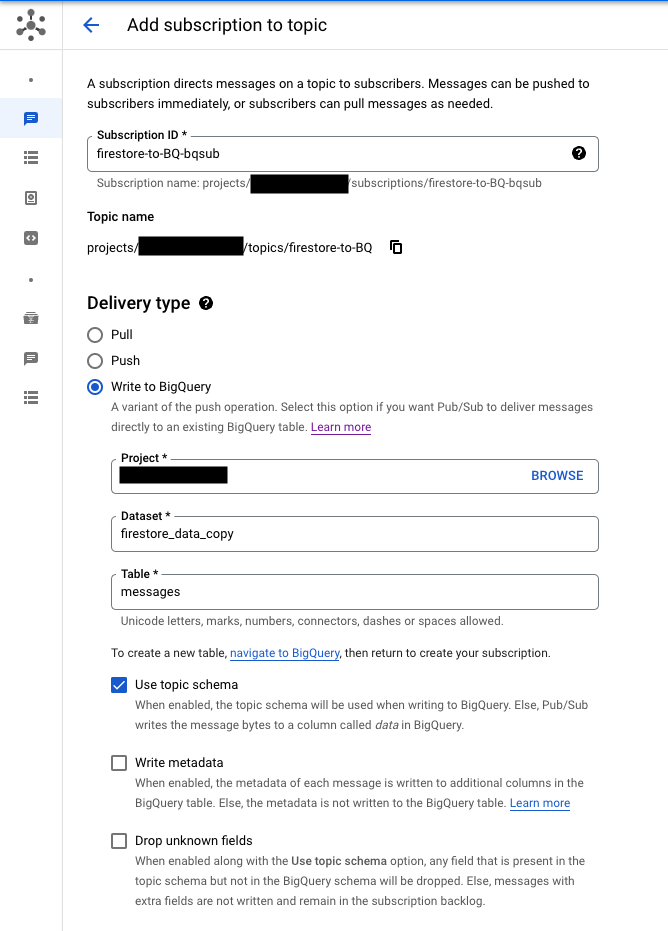

Use the following settings for the BigQuery Subscription:

firestore-to-BQ-bqsub

messages

Click the CREATE button.

In GCP, go to Cloud Functions.

Click copy-data-to-bigquery in the list.

Note: We created this in the previous blog, but if you don’t have it, here are the settings to use:

copy-data-to-bigquery

messages/{documentId}

Click the Edit button.

Click the Next button.

Copy the following code into index.js:
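As a rough idea of what index.js needs to do, here is a minimal sketch. It assumes a document-create trigger whose payload carries Firestore typed values under `event.value.fields`, a topic that uses JSON message encoding, and simple scalar fields. The helper names (`unwrapValue`, `toRow`, `makeHandler`) and the exported function name are hypothetical, and the topic is passed in so the logic can be tried without touching GCP.

```javascript
// Unwrap one Firestore typed value, e.g. { stringValue: "login" } -> "login".
// Sketch only: nested mapValue/arrayValue fields are returned unconverted.
function unwrapValue(typedValue) {
  const [kind] = Object.keys(typedValue);
  return typedValue[kind];
}

// Flatten the trigger payload's `fields` map into a plain row object.
function toRow(fields) {
  const row = {};
  for (const name of Object.keys(fields)) {
    row[name] = unwrapValue(fields[name]);
  }
  return row;
}

// Build a background-function handler around an injected Pub/Sub topic.
function makeHandler(topic) {
  return async (event) => {
    const row = toRow(event.value.fields);
    // JSON payload; assumes the topic uses JSON message encoding.
    const data = Buffer.from(JSON.stringify(row));
    return topic.publishMessage({ data });
  };
}

// Possible wiring in index.js (needs @google-cloud/pubsub in package.json):
//   const { PubSub } = require("@google-cloud/pubsub");
//   exports.copyDataToBigQuery = makeHandler(new PubSub().topic("firestore-to-BQ"));
```

Keeping the topic as a parameter is only a convenience for testing; in the deployed function you would create the topic object once at module load, as in the commented wiring.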


Copy the following code into package.json:


In GCP, go to Firestore.

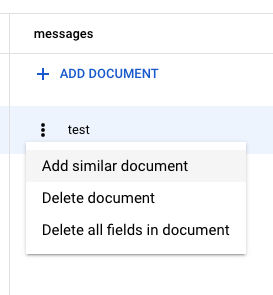

Click the three-dot icon for the test document in the messages collection.

Choose Add similar document from the popup menu.

Click the SAVE button.

In GCP, go to Logging > Logs Explorer.

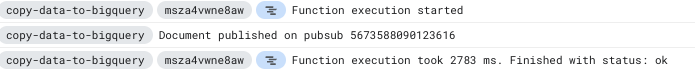

You should see the following:

In GCP, go to BigQuery.

Select the messages table from the left navigation tree.

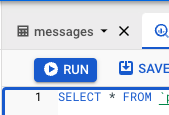

Click the Query drop-down button, and choose In new tab to add a new editor tab.

Type * between the words SELECT and FROM.

Click the Run button.

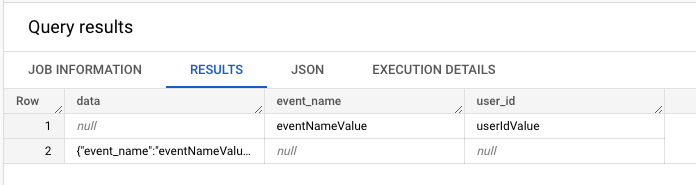

In the Query results section that appears, you should see the data you entered into Firestore.

That’s pretty much it, and as long as your schema doesn’t change, you’re good to go. Currently, though, if you do need to change the schema, you will have to delete and recreate the Pub/Sub Topic, Schema and BigQuery Subscription.
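Because messages that do not match the topic's schema can be rejected at publish time, it may help to check a row against the expected field names before sending it. The sketch below is one way to do that; `schemaFields` and `schemaMismatch` are hypothetical names, and the field list assumes the two columns added earlier.

```javascript
// Hypothetical list of field names from the Pub/Sub schema.
const schemaFields = ["event_name", "user_id"];

// Report fields the schema expects but the row lacks, and fields the
// row carries that the schema does not know about.
function schemaMismatch(row, fields) {
  const missing = fields.filter((name) => !(name in row));
  const extra = Object.keys(row).filter((name) => !fields.includes(name));
  return { missing, extra };
}

// Example: a document that gained a new field and lost a required one.
console.log(schemaMismatch({ event_name: "login", plan: "pro" }, schemaFields));
```

A non-empty `missing` or `extra` list is a hint that the document shape has drifted away from the schema.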

Also, if the for loop at the beginning of the Cloud Function code gave you the chills, here’s a version of the code that gets the document data from Firestore. It’s a little cleaner, and while it does have the added cost of hitting Firestore, it also deletes the document, which keeps your Firestore tidy.
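A minimal sketch of that read-then-delete approach follows. It assumes a create-only trigger (so deleting the document does not fire the function again), a document reference shaped like the one `firebase-admin` provides (`get()`, `delete()`, and a snapshot with `exists` and `data()`), and a topic using JSON message encoding. The function names are hypothetical, and both dependencies are passed in so the flow can be exercised with stand-ins.

```javascript
// Turn a trigger resource name into a Firestore document path, e.g.
// "projects/p/databases/(default)/documents/messages/abc" -> "messages/abc".
function documentPath(resourceName) {
  return resourceName.split("/documents/")[1];
}

// Read the document, publish its data, then delete it.
async function copyAndDelete(docRef, topic) {
  const snapshot = await docRef.get();
  if (!snapshot.exists) {
    return null; // Nothing to copy, for example if the document is already gone.
  }
  // data() returns plain values, so no typed-value unwrapping is needed.
  const data = Buffer.from(JSON.stringify(snapshot.data()));
  const messageId = await topic.publishMessage({ data });
  // Delete only after the publish call has succeeded.
  await docRef.delete();
  return messageId;
}

// Possible wiring in index.js (needs firebase-admin and @google-cloud/pubsub):
//   const admin = require("firebase-admin");
//   const { PubSub } = require("@google-cloud/pubsub");
//   admin.initializeApp();
//   const topic = new PubSub().topic("firestore-to-BQ");
//   exports.copyDataToBigQuery = (event, context) =>
//     copyAndDelete(admin.firestore().doc(documentPath(context.resource)), topic);
```

Deleting after the publish resolves means a failed publish leaves the document in place, so the data is not lost if the function errors out.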

Thanks for reading and I hope this blog has helped you. Keep us in mind for all your mobile solution needs.